This is the third and last blog entry of how do we get the ONTAP final capacity.

In my first blog, we ran through a gamut of explanations how disk rightsizing came about for NetApp’s ONTAP. And the importance of disk rightsizing is to give ONTAP a level set of disks, regardless of manufacturer, model, make, firmware versions and so on, and ONTAP is pretty damn sure that the disks that it gets will not mess up.

In my second blog, progressing from the disk rightsizing stage, was the RAID group sizing stage, where different RAID group size affected the number of disks used for data and for parity in an aggregate. An aggregate, for the uninformed, is the disks pool in which the flexible volume, FlexVol, is derived. In a simple picture below,

OK, the diagram’s in Japanese (I am feeling a bit cheeky today :P)!

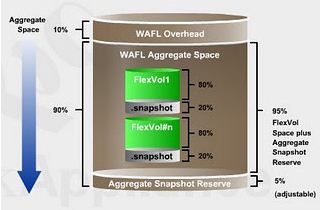

But it does look a bit self explanatory with some help which I shall provide now. If you start from the bottom of the picture, 16 x 300GB disks are combined together to create a RAID Group. And there are 4 RAID Groups created – rg0, rg1, rg2 and rg3. These RAID groups make up the ONTAP data structure called an aggregate. From ONTAP version 7.3 onward, there were some minor changes of how ONTAP reports capacity but fundamentally, it did not change much from previous versions of ONTAP. And also note that ONTAP takes a 10% overhead of the aggregate for its own use.

With the aggregate, the logical structure called the FlexVol is created. FlexVol can be as small as several megabytes to as large as 100TB, incremental by any size on-the-fly. This logical structure also allow shrinking of the capacity of the volume online and on-the-fly as well. Eventually, the volumes created from the aggregate become the next-building blocks of NetApp NFS and CIFS volumes and also LUNs for iSCSI and Fibre Channel. Also note that, for a more effective organization of logical structures from the volumes, using qtree is highly recommended for files and ONTAP management reasons.

However, for both aggregate and the FlexVol volumes created from the aggregate, snapshot reserve is recommended. The aggregate takes a 5% overhead of the capacity for snapshot reserve, while for every FlexVol volume, a 20% snapshot reserve is applied. While both snapshot percentage are adjustable, it is recommended to keep them as best practice (except for FlexVol volumes assigned for LUNs, which could be adjusted to 0%)

Note: Even if the snapshot reserve is adjusted to 0%, there are still some other rule sets for these LUNs that will further reduce the capacity. When dealing with NetApp engineers or pre-sales, ask them about space reservations and how they do snapshots for fat LUNs and thin LUNs and their best practices in these situations. Believe me, if you don’t ask, you will be very surprised of the final usable capacity allocated to your applications)

In a nutshell, the dissection of capacity after the aggregate would look like the picture below:

We can easily quantify the overall usable in the little formula that I use for some time:

Rightsized Disks capacity x # Disks x 0.90 x 0.95 = Total Aggregate Usable Capacity

Then remember that each volume takes a 20% snapshot reserve overhead. That’s what you have got to play with when it comes to the final usable capacity.

Though the capacity is not 100% accurate because there are many variables in play but it gives the customer a way to manually calculate their potential final usable capacity.

Please note the following best practices and this is only applied to 1 data aggregate only. For more aggregates, the same formula has to be applied again.

- A RAID-DP, 3-disk rootvol0, for the root volume is set aside and is not accounted for in usable capacity

- A rule-of-thumb of 2-disks hot spares is applied for every 30 disks

- The default RAID Group size is used, depending on the type of disk profile used

- Snapshot reserves default of 5% for aggregate and 20% per FlexVol volumes are applied

- Snapshots for LUNs are subjected to space reservation, either full or fractional. Note that there are considerations of 2x + delta and 1x + delta (ask your NetApp engineer) for iSCSI and Fibre Channel LUNs, even though snapshot reserves are adjusted to 0% and snapshots are likely to be turned off.